Introduction to lamatrix: Getting started.#

lamatrix is a tool to help you fit linear models to data. This tool is designed to make it easier to build models and fit them.

These are the basic principles behind lamatrix.

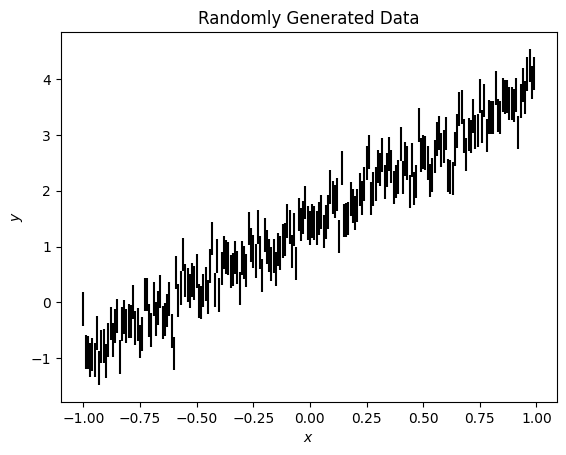

Firstly, let’s create some data.

[1]:

import numpy as np

from astropy.io import fits

import matplotlib.pyplot as plt

[2]:

x = np.arange(-1, 1, 0.01)

y = x * 2.3459 + 1.435 + np.random.normal(0, 0.3, size=len(x))

ye = np.ones(len(x)) * 0.3

[6]:

fig, ax = plt.subplots()

ax.errorbar(x, y, ye, ls='', c='k')

ax.set(xlabel='$x$', ylabel='$y$', title='Randomly Generated Data');

I have generated some synthetic data with the relation

\(y = mx + c\)

where I have fixed \(m\) and \(c\). This is a very simple model, where I know the answer for the two variables I would like to fit. We can fit this with lamatrix.

Our first question is what model is reasonable to fit this data. lamatrix has the following model options

constant

polynomial

step

sinusoid

Gaussian

spline

From this list, we know that our data is drawn from a polynomial.

Model Class#

lamatrix provides a Model class to work with. Models are classes that are (usually) initialized with the name(s) of any variables that the model is a function of. These names are strings. Models may have additional keyword arguments that are required on initialization. Models have the following class methods that you will usually interact with

design_matrix: Thedesign_matrixmethod will return the design matrix that describes the model for all the input variables.fit: Thefitmethod takes in data and optionally errors to fit the functional form of the model to dataevaluateTheevaluatemethod will return the best fit of the model to the data

Let’s look at an example

[7]:

from lamatrix import Polynomial

The Polynomial class is a type of Model in lamatrix. We will initialize it with the name of the variable of the polynomial, and the order of the polynomial. Let’s make a model using this class

[8]:

Polynomial('x', order=1)

[8]:

Polynomial(x)[n, 1]

This is an n by 1 polynomial model. Here n indicates the number of points in the variable (x) and 1 indicates how many vectors are in the design matrix. If we increase the order, we will see this number increase

[9]:

Polynomial('x', order=4)

[9]:

Polynomial(x)[n, 4]

We can check the equation of our model object using the equation property

[10]:

Polynomial('x', order=1).equation

[10]:

This latex equation shows the model. Let’s display the latex here:

This is a simple model of some unknown weight \(w_0\) multiplied by \(x\).

To make this model fit our data we will need to include a constant term.

[11]:

from lamatrix import Constant

[12]:

Constant()

[12]:

Constant()[n, 1]

This Model does not require any input, there is no variable. If we print the equation we see it contains only an unknown weight

[13]:

Constant().equation

[13]:

Let’s combine these Models.

[14]:

model = Polynomial('x', order=1) + Constant()

[15]:

model

[15]:

JointModel

Polynomial(x)[n, 1]

Constant()[n, 1]

Now we have combined the model we have a JointModel. Let’s look at the equation now

[16]:

model.equation

[16]:

This is now our first order polynomial, including the offset term. We have two unknown weights: \(w_0\) and \(w_1\) representing the slope and intercept of our model.

Our model contains a design matrix, which is the matrix representation of the equation above. When dotted with a vector of weights (\(\mathbf{w}\)) this gives us a realization of our model. We can access the design matrix inside the Model object. The design_matrix method will return the design matrix. You must input examples of all variables where you want the design matrix to be evaluated. In this case, that is x.

This can be any x that could be generated. Here I’ve generated an short example x vector.

[17]:

x_example = np.arange(-1, 1, 0.25)

model.design_matrix(x=x_example)

[17]:

array([[-1. , 1. ],

[-0.75, 1. ],

[-0.5 , 1. ],

[-0.25, 1. ],

[ 0. , 1. ],

[ 0.25, 1. ],

[ 0.5 , 1. ],

[ 0.75, 1. ]])

This design matrix represents x and a constant.

Now we have the model, let’s fit it to the data. We will input the variables (x) and the data we want to fit.

[18]:

model.fit(x=x, data=y)

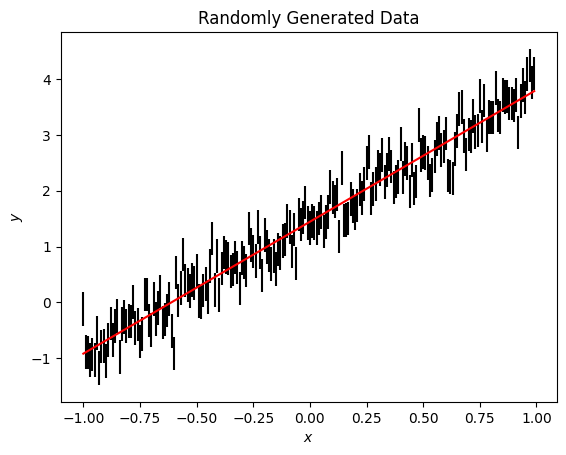

We can plot the model over the data to see the fit

[21]:

fig, ax = plt.subplots()

ax.errorbar(x, y, ye, ls='', c='k')

ax.plot(x, model.evaluate(x=x), c='r')

ax.set(xlabel='$x$', ylabel='$y$', title='Randomly Generated Data');

This is a good fit. To see the results of the fit we must first introduce a new class: Distributions.

There are two occasions inside lamatrix where we require some concept of a distribution.

The prior distribution: this holds our prior belief of the values of best fitting weights. By default, all priors are infintely wide Gaussians (i.e. we have no prior understanding).

The posterior distribution: this holds the best fitting values after we have fit our model to data, informed by the prior.

Both of these are Gaussian distributions in lamatrix. We use the following Python objects to hold these distributions.

Distribution#

In lamatrix all distributions are by definition Gaussian. These are specified by two parameters: the mean and the standard deviation. These are specified as Tuple objects with (mean, standard deviation), e.g.

(0, 1)

Would indicate a Gaussian with mean 0 and standard deviation 1.

Distributions have special properties compared with regular tuples:

meanandstdproperties return the mean and standard deviation as floats respectivelyfreezing/thawing: In

lamatrixyou may want to “freeze” a certain variable to its mean value, i.e. make the standard deviation 0.Distributionclasses have thefreezeandthawmethods to enable this.you can draw a sample from the distribution using the

samplemethod

DistributionContainer#

One Tuple Distribution object is valid for each weight we fit in the model. We must hold many weights to account for all of the components in the model. These are held in a DistributionContainer. This is a special case of a List which has the following methods

meanandstdproperties return the mean and standard deviation asnumpy.NDArray’s respectivelyfreezeandthawmethods as abovesamplemethod as above

Now that we understand distibutions, we can return to the model. To find the best fit of our model, we need to access the posteriors. These are the results of the fit.

[22]:

model.posteriors

[22]:

DistributionContainer

[(2.36, 0.12), (1.445, 0.071)]

These posteriors represent the mean and standard deviation of the best fit weights for our model. We can look at the means

[23]:

model.posteriors.mean

[23]:

array([2.36184521, 1.44454686])

These values match the input \(m\) and \(c\) very closely! These represent our best fitting weights.

Let’s improve our fit by including errors on the data.

[24]:

model.fit(x=x, data=y, errors=ye)

Now we have fit again, let’s look at the mean

[25]:

model.posteriors.mean

[25]:

array([2.36184521, 1.44454686])

The posterior standard deviation now includes a more reasonable estimate of the error on these best fit parameters

[26]:

model.posteriors.std

[26]:

array([0.03674281, 0.021214 ])

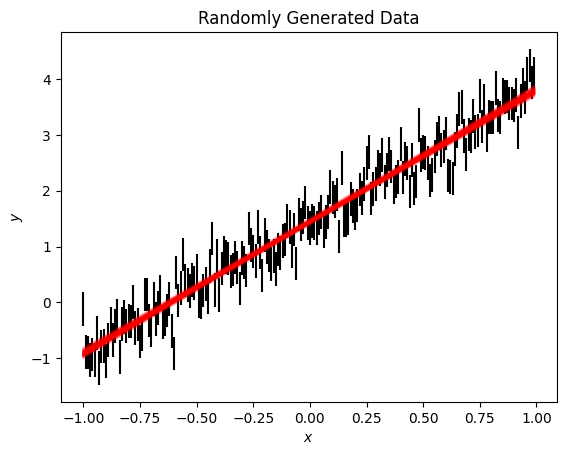

We can plot this new model fit over the data. Here we draw 100 samples of the model, which draws weights from the posterior distribution, rather than using the best fit mean.

[27]:

fig, ax = plt.subplots()

ax.errorbar(x, y, ye, ls='', c='k')

ax.plot(x, np.asarray([model.sample(x=x) for count in range(100)]).T, c='r', alpha=0.1);

ax.set(xlabel='$x$', ylabel='$y$', title='Randomly Generated Data');

Often we want to be able to save a model to share it with colleagues. Model objects are able to save and load models using json files.

[28]:

model.save('output.json')

This saves a juman readable model. Below we show the first 20 lines of this file

[29]:

!head -20 output.json

{

"object_type": "JointModel",

"initializing_kwargs": {},

"priors": {

"mean": [

0,

0

],

"std": [

Infinity,

Infinity

]

},

"posteriors": {

"mean": [

2.361845212485081,

1.4445468615716888

],

"std": [

0.03674280542968608,

The model json can then be loaded back in to lamatrix

[30]:

from lamatrix import load

[31]:

load('output.json')

[31]:

JointModel

Polynomial(x)[n, 1]

Constant()[n, 1]

Finally you may want to include the results of your model as a latex table in your work. You can do this by using the to_latex method, which will also return the equation.

[32]:

print(model.to_latex())

\[f(\mathbf{x}) = w_{0} \mathbf{x}^{1} + w_{1} \]

\begin{table}[h!]

\centering

\begin{tabular}{|c|c|c|}

\hline

Coefficient & Posterior & Prior \\\hline

w & $2.36 \pm 0.04$ & $0 \pm \infty$ \\\hline

w & $1.44 \pm 0.02$ & $0 \pm \infty$ \\\hline

\end{tabular}

\end{table}

That concludes the basics of lamatrix. To see how to use the package for more advanced cases check out the next tutorial.