Introduction to lamatrix: Multi-dimensional models

lamatrix lets you build linear models for input data and vectors of any shape. This means you can fit high-dimensional data with high-dimensional inputs. Let's take a look at fitting some simple 2D data.

[6]:

import numpy as np

import matplotlib.pyplot as plt

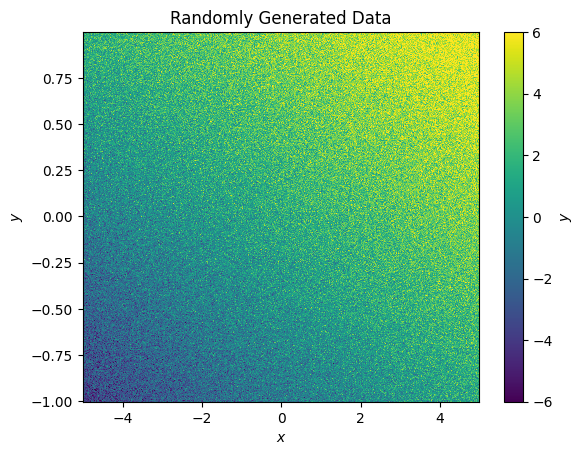

Below we create some fake data to fit. The data follow a simple 2D polynomial in x and y that is first order in each dimension, so it includes an x·y cross term. We then add Gaussian noise with a standard deviation of 1.2.

[7]:

x = np.arange(-5, 5, 0.02)

y = np.arange(-1, 1, 0.005)

X, Y = np.meshgrid(x, y)

w = [1.3598, 0.4589, 2.495, -0.0234]

Z = w[0] + w[1] * X + w[2] * Y + w[3] * X * Y + np.random.normal(0, 1.2, size=X.shape)

Ze = np.ones_like(Z) * 1.2
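Because this surface is linear in the weights `w`, the noise-free values can also be written as a design matrix times a weight vector. The sketch below builds that matrix with plain NumPy to illustrate the idea. It is our own illustration, not lamatrix code, and the helper name `design_matrix` is hypothetical.

```python
import numpy as np

def design_matrix(X, Y):
    """Hypothetical helper: stack the basis terms [1, x, y, x*y] as columns, one row per pixel."""
    x, y = np.ravel(X), np.ravel(Y)
    return np.column_stack([np.ones_like(x), x, y, x * y])

# Same grid and weights as the cell above
x = np.arange(-5, 5, 0.02)
y = np.arange(-1, 1, 0.005)
X, Y = np.meshgrid(x, y)
w = np.array([1.3598, 0.4589, 2.495, -0.0234])

A = design_matrix(X, Y)                  # shape (number of pixels, 4)
model_grid = (A @ w).reshape(X.shape)    # noise-free model, back on the 2D grid

# Matches the explicit formula used to generate Z, without the noise term
direct = w[0] + w[1] * X + w[2] * Y + w[3] * X * Y
print(A.shape, np.allclose(model_grid, direct))
```

Each column of `A` corresponds to one term of the model, which is why a linear fit returns one weight per term.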

[8]:

fig, ax = plt.subplots()

im = ax.pcolormesh(x, y, Z, vmin=-6, vmax=6)

ax.set(xlabel='$x$', ylabel='$y$', title='Randomly Generated Data');

cbar = plt.colorbar(im, ax=ax)

cbar.set_label("$z$")

Let's fit a polynomial to this data so that we can retrieve the best-fit weights. First, we create a first-order polynomial, plus a constant, in each of the dimensions we are interested in.

[9]:

from lamatrix import Polynomial, Constant

[10]:

px = Constant() + Polynomial('x', order=1)

py = Constant() + Polynomial('y', order=1)

[11]:

model = py * px

Let's take a look at the model:

[12]:

model

[12]:

JointModel

Constant()[n, 1]

Polynomial(x)[n, 1]

Polynomial(y)[n, 1]

CrosstermModel(y, x)[n, 1]

This looks great: we've included a constant term, a polynomial for each dimension, and a CrosstermModel object, which holds the cross terms between the x and y polynomials. Let's look at the equation:

[13]:

model.equation

[13]:

This looks like what we expect!
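For reference, here is the product expanded by hand, in our own notation (not necessarily how lamatrix renders `model.equation`). Writing the two factors with hypothetical coefficients $a_i$ and $b_i$:

$$
(a_0 + a_1 x)(b_0 + b_1 y) = a_0 b_0 + a_1 b_0\,x + a_0 b_1\,y + a_1 b_1\,x y
$$

Judging from the four components listed above, each of these terms gets its own free weight, so the fitted model has the form

$$
f(x, y) = w_0 + w_1 x + w_2 y + w_3\,x y
$$

which is the same form we used to generate `Z`, with the weights in the order constant, x, y, cross term.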

Note that we have included the terms in a specific order, and that order determines the order of the posterior weights from the fit. If you build the model with a different order of operations you can still fit the data, but you should check which posterior weight corresponds to which term.
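To see why the term order matters, here is a small NumPy sketch that is independent of lamatrix. Solving the same least-squares problem with the design-matrix columns in a different order returns the same weights, permuted to match the new column order. The column names and the permutation are our own illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(42)
x = rng.uniform(-5, 5, 1000)
y = rng.uniform(-1, 1, 1000)
w_true = np.array([1.3598, 0.4589, 2.495, -0.0234])

# Columns in the order [constant, x, y, x*y]
names = ["constant", "x", "y", "x*y"]
A = np.column_stack([np.ones_like(x), x, y, x * y])
z = A @ w_true + rng.normal(0, 1.2, x.size)

# Reorder the columns, as if the model had been assembled in a different order
order = [3, 0, 2, 1]
A_reordered = A[:, order]

w_fit, *_ = np.linalg.lstsq(A, z, rcond=None)
w_fit_reordered, *_ = np.linalg.lstsq(A_reordered, z, rcond=None)

# Same solution, but the entries follow the new column order
print(np.allclose(w_fit[order], w_fit_reordered))
for name, value in zip([names[i] for i in order], w_fit_reordered):
    print(f"{name:>8}: {value: .4f}")
```

The fit itself is unchanged by reordering; only the bookkeeping of which number belongs to which term changes.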

Let’s fit this to data!

[14]:

model.fit(x=X, y=Y, data=Z, errors=Ze)

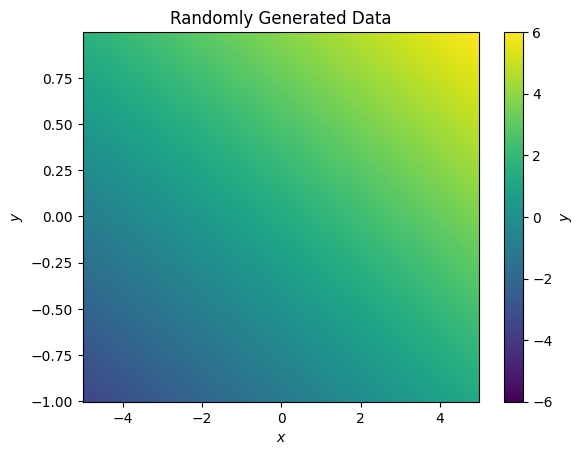

The fit runs without errors. Let's look at the best-fit model:

[15]:

fig, ax = plt.subplots()

im = ax.pcolormesh(x, y, model.evaluate(x=X, y=Y), vmin=-6, vmax=6)

ax.set(xlabel='$x$', ylabel='$y$', title='Best Fit Model');

cbar = plt.colorbar(im, ax=ax)

cbar.set_label("$z$")

This is an excellent fit to our data. Let's look at the posteriors:

[16]:

model.posteriors.mean

[16]:

array([ 1.35338088, 0.45856768, 2.49436478, -0.02409446])

These agree with our input weights within the uncertainties:

[17]:

w

[17]:

[1.3598, 0.4589, 2.495, -0.0234]
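To check how closely the recovered weights match the inputs independently of lamatrix, here is a minimal NumPy sketch of the equivalent weighted linear least-squares fit, assuming independent Gaussian errors and no priors. Under those assumptions the best-fit weights solve the weighted normal equations, and their 1σ uncertainties come from the inverse of $A^\top C^{-1} A$. We are not claiming this is how lamatrix computes its posteriors internally. The sketch uses a fixed random seed, so its numbers will differ slightly from the outputs above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Regenerate data like the notebook, with a fixed seed for reproducibility
x = np.arange(-5, 5, 0.02)
y = np.arange(-1, 1, 0.005)
X, Y = np.meshgrid(x, y)
w_true = np.array([1.3598, 0.4589, 2.495, -0.0234])
sigma = 1.2
Z = (w_true[0] + w_true[1] * X + w_true[2] * Y + w_true[3] * X * Y
     + rng.normal(0, sigma, size=X.shape))
Ze = np.ones_like(Z) * sigma

# Design matrix with columns [constant, x, y, x*y], one row per pixel
A = np.column_stack([np.ones(X.size), X.ravel(), Y.ravel(), (X * Y).ravel()])
inv_var = 1.0 / Ze.ravel() ** 2

# Weighted normal equations: (A^T C^-1 A) w = A^T C^-1 z, with diagonal C
precision = A.T @ (A * inv_var[:, None])
rhs = A.T @ (Z.ravel() * inv_var)
w_best = np.linalg.solve(precision, rhs)
w_err = np.sqrt(np.diag(np.linalg.inv(precision)))

# Deviation from the input weights in units of the 1-sigma uncertainty
n_sigma = (w_best - w_true) / w_err
for name, wb, we, ns in zip(["constant", "x", "y", "x*y"], w_best, w_err, n_sigma):
    print(f"{name:>8}: {wb: .5f} +/- {we:.5f}  ({ns:+.2f} sigma)")
```

Expressing the difference in units of the uncertainty makes "within errors" concrete: values of a few sigma or less are what you expect from noise alone.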

Now suppose we want to evaluate the model at a different set of inputs, for example vectors of x and y positions. We can simply evaluate the model at those points:

[18]:

model.evaluate(x=np.arange(-2, 2, 0.2), y=np.random.uniform(-1, 1, size=20))

[18]:

array([-0.00776975, -0.73467785,  2.3140337 , -1.72610951, -0.91777086,
        3.30512991,  3.02062454,  0.19043161,  1.66923261,  0.4609963 ,
        3.79964524,  3.33714304,  3.90930434,  1.23816664, -0.65516974,
        0.7174055 ,  1.70571638, -0.3687958 ,  2.27321786,  2.14645696])
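As a rough cross-check of what evaluation does for this particular model, the sketch below evaluates $w_0 + w_1 x + w_2 y + w_3 x y$ elementwise by hand, using the posterior means printed earlier. The helper `evaluate_bilinear` is hypothetical and not part of lamatrix, and because the y positions are random, its values will not match the output above.

```python
import numpy as np

# Posterior mean weights printed above, in the order [constant, x, y, x*y]
w_post = np.array([1.35338088, 0.45856768, 2.49436478, -0.02409446])

def evaluate_bilinear(w, x, y):
    """Hypothetical helper: evaluate w0 + w1*x + w2*y + w3*x*y elementwise."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape:
        raise ValueError(f"x and y must have the same shape, got {x.shape} and {y.shape}")
    return w[0] + w[1] * x + w[2] * y + w[3] * x * y

rng = np.random.default_rng(1)
xs = np.arange(-2, 2, 0.2)              # 20 x positions
ys = rng.uniform(-1, 1, size=xs.size)   # matching random y positions
values = evaluate_bilinear(w_post, xs, ys)
print(values.shape)
```

Because the model is just a weighted sum of basis terms, evaluating it at new points only requires computing those terms at the new inputs.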

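You can combine any number of high-dimensional inputs and datasets in the same way. When you fit, the data and all of the inputs you pass in must have the same shape. As the example above shows, the inputs you evaluate at do not need to match the shape used for fitting.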
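One way to see why the shapes must agree: a linear fit pairs each data value with the inputs at the same position, so every array is flattened element by element into rows of a design matrix. Here is a minimal NumPy sketch with 3D inputs. The helper `fit_linear` and the basis functions are hypothetical illustrations, not lamatrix functions.

```python
import numpy as np

def fit_linear(basis_funcs, data, errors, **inputs):
    """Hypothetical helper: weighted least-squares fit for inputs of any shape."""
    shapes = {name: np.shape(arr) for name, arr in inputs.items()}
    shapes["data"] = np.shape(data)
    shapes["errors"] = np.shape(errors)
    if len(set(shapes.values())) != 1:
        raise ValueError(f"all arrays must share one shape, got {shapes}")
    # Flatten every input the same way so row i always refers to the same element
    flat = {name: np.ravel(arr) for name, arr in inputs.items()}
    A = np.column_stack([f(**flat) for f in basis_funcs])
    inv_sigma = 1.0 / np.ravel(errors)
    # Scaling rows by 1/sigma turns ordinary least squares into weighted least squares
    w, *_ = np.linalg.lstsq(A * inv_sigma[:, None], np.ravel(data) * inv_sigma, rcond=None)
    return w

# Basis terms [1, x, y, t] for a 3D dataset
basis = [
    lambda x, y, t: np.ones_like(x),
    lambda x, y, t: x,
    lambda x, y, t: y,
    lambda x, y, t: t,
]

rng = np.random.default_rng(2)
X, Y, T = np.meshgrid(np.linspace(-1, 1, 20), np.linspace(-1, 1, 15),
                      np.linspace(0, 1, 10), indexing="ij")
w_true = np.array([0.5, 1.0, -2.0, 3.0])
D = w_true[0] + w_true[1] * X + w_true[2] * Y + w_true[3] * T + rng.normal(0, 0.1, X.shape)
E = np.full(X.shape, 0.1)

w_fit = fit_linear(basis, D, E, x=X, y=Y, t=T)
print(np.round(w_fit, 3))

# A mismatched input shape is rejected before any fitting happens
mismatch_error = None
try:
    fit_linear(basis, D, E, x=X, y=Y[..., :5], t=T)
except ValueError as err:
    mismatch_error = err
print("shape check:", mismatch_error)
```

Checking shapes up front turns a silent misalignment between data and inputs into an explicit error.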